Embracing Privacy at Habu/LiveRamp

With regulations and data privacy laws picking up steam, the need for private data computation and analytics has taken a front seat. Looking at the current outlook of many companies, data governance is a hot - 🥵 - hot topic. And for companies operating in global markets, there’s the added pressure of adhering to regional as well as cross-border data transfer laws.

To give you an example, there are US state laws (CPRA in California and UCPA in Utah). Then there’s the EU’s GDPR and India’s Digital Personal Data Protection Act 2023 (DPDPA), to name a couple. And if that was not enough, there are frameworks in place that regulate the flow of data across borders. The latest example of this is the EU-US Data Privacy Framework. Honestly, for an organization trying to run a profitable business, navigating these laws correctly is as difficult as it is important.

With this context in mind, we at Habu (recently acquired by LiveRamp) were keen on solving the privacy problem at the root level, from an engineering perspective. Habu is a data collaboration platform that allows two (or more) parties to come together and draw insights and heuristics from their data. Honestly, that’s the simplest way I can explain Habu.

So if I have two parties bringing in their datasets, how do I calculate what the overlap of data is?

Well, simple right, an age-old technique - SQL✨

    SELECT unique_identifier, count(*)
    FROM my_dataset JOIN your_dataset
    USING (unique_identifier)
    GROUP BY unique_identifier;

Looks like a simple query, helps me draw insights, and ruins the mood of all regulators and privacy advocates under the sun.

Well here are a couple of issues that I can think of:

- There’s a `unique_identifier` at the output that can pinpoint an individual.
- Assuming there’s no preprocessing, there probably exists other PII in the output.

While the two issues mentioned above can be solved by encrypting the unique identifier, applying projection constraints to PII columns, and putting some kind of analysis rules in place, it does sound tedious. Doable, but tedious.
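To make the "doable, but tedious" part concrete, here is a minimal sketch of that kind of preprocessing in Python. It is not how Habu implements it. The key, the column names, and the helper functions are all hypothetical, and it uses a keyed hash (HMAC-SHA256) as a stand-in for encrypting the identifier.

```python
import hashlib
import hmac

# Hypothetical shared secret and allow-list, for illustration only.
SECRET_KEY = b"replace-with-a-managed-secret"
ALLOWED_COLUMNS = {"unique_identifier", "purchase_count"}


def pseudonymize(identifier: str, key: bytes = SECRET_KEY) -> str:
    """Replace a raw identifier with a keyed hash (HMAC-SHA256 hex digest)."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def project(row: dict, allowed: set = ALLOWED_COLUMNS) -> dict:
    """Keep only allow-listed columns, then pseudonymize the identifier."""
    kept = {col: val for col, val in row.items() if col in allowed}
    kept["unique_identifier"] = pseudonymize(str(kept["unique_identifier"]))
    return kept


row = {"unique_identifier": "user-42", "email": "a@example.com", "purchase_count": 3}
safe_row = project(row)
print(sorted(safe_row))  # ['purchase_count', 'unique_identifier']
```

Both parties would need to use the same key for the pseudonymized identifiers to still join, and every new PII column means another allow-list decision. That is where the tedium comes from.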

One question still tickles my brain. If one of the high-contributing individuals (that is, a unique identifier contributing, say, 200 rows in a 500-row joined dataset) is taken away from one of the datasets, wouldn’t that significantly affect the count? Would that not make it easy to point out who that person is? Does that not make it too easy to target and bombard an individual with ads? SCARY 🤯
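Here is a toy Python illustration of that worry, using made-up identifiers (`u0` is the hypothetical heavy contributor). It compares the joined row count with and without that one individual.

```python
from collections import Counter


def join_count(left_ids, right_ids):
    """Number of rows produced by an inner join on the identifier."""
    right = Counter(right_ids)
    return sum(right[i] for i in left_ids)


# Made-up data: "u0" contributes 200 rows, 300 other users contribute 1 each.
left = ["u0"] * 200 + [f"u{i}" for i in range(1, 301)]
right = [f"u{i}" for i in range(0, 301)]

with_u0 = join_count(left, right)
without_u0 = join_count([i for i in left if i != "u0"], right)
print(with_u0, without_u0)  # 500 300
```

Anyone who can see both counts learns that a single identifier accounts for 200 rows, which is exactly the kind of leak an exact count cannot hide.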

To solve this problem, we turn to more fundamental and formal guarantees of privacy, for individuals, at the row level in a dataset. Enter Differential Privacy 🥳.

I am not going to focus tremendously on Differential Privacy as I am not an academic, but here’s a link to a cool (and more importantly, layman’s) explanation of DP. To showcase, in a simple gif, we can visualize DP as:

Using DP, we designed a step-by-step protocol for implementing privacy at the row level for individuals, while ensuring some kind of stability on JOINs, as well as on noise addition and final outputs. Drawing inspiration from one of the seminal works on privacy and its intersection with databases, we designed our software to provide the flexibility to run custom-defined query sets while enforcing Differential Privacy at the output of SQL queries.

Designing a practical DP workflow was a challenge, to say the least, but a mighty fun problem to solve. This did not mean just implementing the code for generating Private SQL for datasets. It involved designing the entire application layer, budget accounting framework, and access controls for setting up the environment for collaboration and implementing it within a (very complex) microservice-oriented codebase.

What DP does is very simple for the end-user to understand. Let’s take the same example as before.

    SELECT unique_identifier, count(*)
    FROM my_dataset JOIN your_dataset
    USING (unique_identifier)
    GROUP BY unique_identifier;

Assume that we now have preprocessing steps in place, and ignore projection constraints here. Consider the same case, where a unique identifier contributes 200 rows in a 500-row joined dataset.

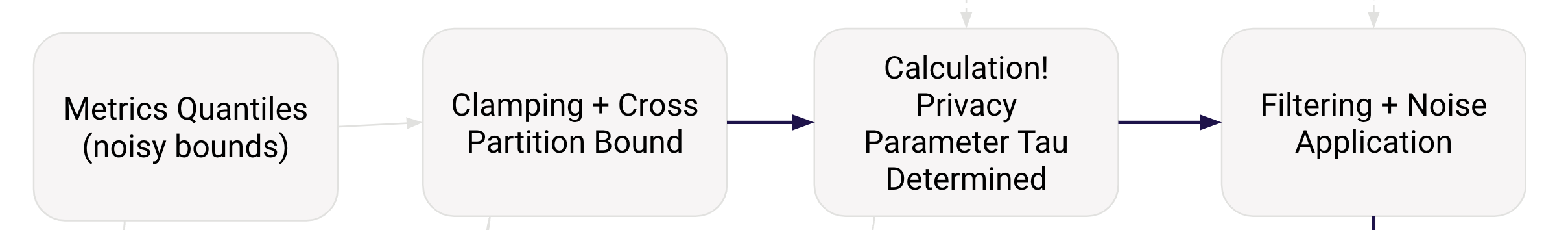

For DP, we follow a set of steps to provide formal mathematical privacy guarantees. The first step is to get the max contribution for the dataset, which is the maximum number of rows any one individual contributes to it. In the example, the max contribution is 200. Max contribution matters because it determines how we scale the noise added to the final output. Using the max contribution, epsilon (the privacy budget), and delta (the probability of total failure) assigned to a specific SQL query run, we follow an approximate DP, that is (epsilon, delta)-DP, algorithm to generate and enforce privacy guarantees. For the full flow and an explanation of each step in the algorithm, I recommend reading the research paper in full. Here’s a summary diagram to show the flow of the algorithm, as described in the research.

And the best part about this is that we enforce privacy guarantees while maintaining flexibility for the end-users, and scaling with data size!
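To show why max contribution matters for noise scaling, here is a minimal Python sketch. To be clear, this is not the algorithm from the research paper and not our production code. It is the textbook Laplace mechanism, which gives pure epsilon-DP and does not use delta at all, applied to a total row count. The data and function names are made up for illustration.

```python
import random
from collections import Counter


def max_contribution(ids):
    """Largest number of rows contributed by any single identifier."""
    return max(Counter(ids).values())


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def noisy_count(ids, epsilon, rng):
    """Row count plus Laplace noise with scale = max contribution / epsilon."""
    sensitivity = max_contribution(ids)
    return len(ids) + laplace_noise(sensitivity / epsilon, rng)


# Same made-up shape as the example: one identifier with 200 of 500 rows.
joined_ids = ["u0"] * 200 + [f"u{i}" for i in range(1, 301)]
rng = random.Random(0)

print(max_contribution(joined_ids))  # 200
print(noisy_count(joined_ids, epsilon=1.0, rng=rng))
```

With a max contribution of 200, the noise scale at epsilon = 1 is 200, so a single heavy contributor forces a lot of noise onto a 500-row count. That is why bounding how many rows each individual can contribute is such an important step before adding noise.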

If you’re interested in better understanding the algorithm, here are a few links to other blogs:

- Reservoir Sampling by Florian Tramer

- Differential Privacy for Databases by Joseph P. Near and Xi He

- CS 860 - Algorithms for Private Data Analysis by Gautam Kamath